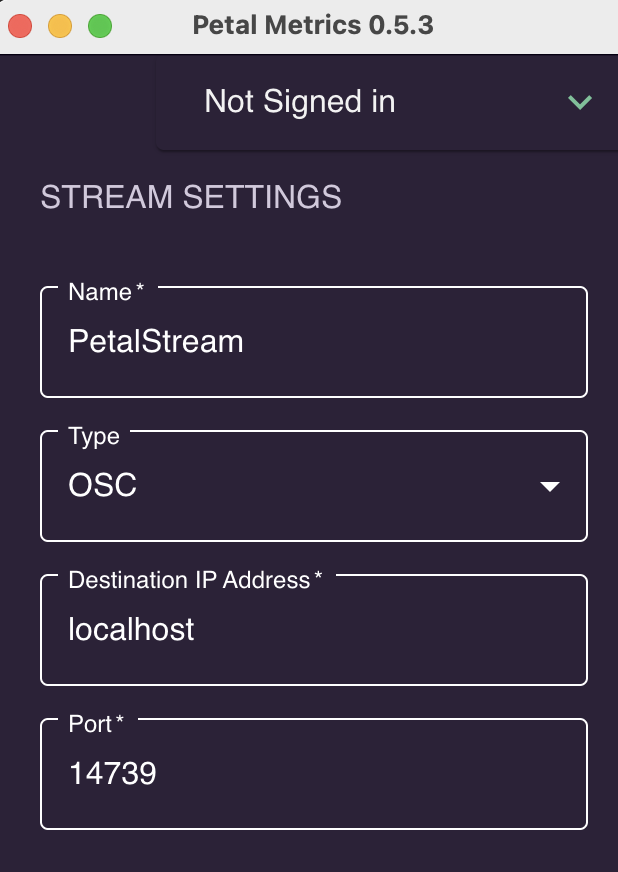

The Muse headband can be connected to your computer using Petal Metrics ([https://petal.tech/downloads](https://petal.tech/downloads)). This tool forwards the Muse's EEG data to your computer over OSC (Open Sound Control). Update 2024: this app is no longer free.
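Receiving that OSC stream needs nothing more than a UDP socket and a small decoder. The sketch below is a minimal, standard-library-only example, assuming Petal Metrics sends plain OSC 1.0 messages (not bundles) with float32, int32 or string arguments. The port `5000` and the `listen` helper are hypothetical placeholders, not documented Petal Metrics defaults; match the host and port to whatever the app is configured to send to.

```python
from __future__ import annotations

import socket
import struct


def _read_padded_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read a null-terminated OSC string and skip padding to the next 4-byte boundary."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # OSC strings include at least one null byte and are padded to a multiple of 4 bytes.
    next_offset = (end + 4) & ~3
    return text, next_offset


def parse_osc_message(data: bytes) -> tuple[str, list]:
    """Decode one OSC 1.0 message into (address, arguments)."""
    if data.startswith(b"#bundle"):
        raise ValueError("OSC bundles are not handled by this sketch")
    address, offset = _read_padded_string(data, 0)
    tags, offset = _read_padded_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args: list = []
    for tag in tags[1:]:
        if tag == "f":  # big-endian 32-bit float
            (value,) = struct.unpack_from(">f", data, offset)
            offset += 4
        elif tag == "i":  # big-endian 32-bit signed int
            (value,) = struct.unpack_from(">i", data, offset)
            offset += 4
        elif tag == "s":  # padded OSC string
            value, offset = _read_padded_string(data, offset)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        args.append(value)
    return address, args


def listen(host: str = "127.0.0.1", port: int = 5000, max_packets: int = 10) -> None:
    """Hypothetical helper: print the first few OSC messages received on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        for _ in range(max_packets):
            data, _sender = sock.recvfrom(65535)
            address, args = parse_osc_message(data)
            print(address, args)
```

To try it, call `listen()` and point the app's OSC output at the same host and port. If the stream turns out to use bundles, or address patterns you need to filter on, a full OSC library is likely the better choice.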

| INPUT | NORMAL CODES | CODES WITH SHIFT (hold START 1) |
|---|---|---|
| COIN 1 | 5 | |
| COIN 2 | 6 | |
| START 1 | 1 | |
| START 2 | 2 | Esc |
| 1 RIGHT | Right arrow | Tab |
| 1 LEFT | Left arrow | Enter |
| 1 UP | Up arrow | Key below Esc (volume, gamma, etc.) |
| 1 DOWN | Down arrow | P (pause) |
| 1 SW 1 | Left Ctrl | 5 (Coin A) |
| 1 SW 2 | Left Alt | |
| 1 SW 3 | Space | |
| 1 SW 4 | Left Shift | |
| 1 SW 5 | Z | |
| 1 SW 6 | X | |
| 1 SW 7 | C | |
| 1 SW 8 | V | |
| 1 A | P | |
| 1 B | Enter | |
| 2 RIGHT | G | |
| 2 LEFT | D | |
| 2 UP | R | |
| 2 DOWN | F | |
| 2 SW 1 | A | |
| 2 SW 2 | S | |
| 2 SW 3 | Q | |
| 2 SW 4 | W | |
| 2 SW 5 | I | |
| 2 SW 6 | K | |
| 2 SW 7 | J | |
| 2 SW 8 | L | |
| 2 A | Tab | |
| 2 B | Esc | |
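The shift column can be read as a two-level lookup: while START 1 is held, a control sends its shifted code if it has one. The sketch below transcribes the table into dictionaries and resolves the key for a given control. Two points are assumptions, not stated above: controls without a shifted code are taken to fall back to their normal code, and "the key below Esc" is written as the backtick key found there on a US layout.

```python
from __future__ import annotations

# Normal key codes, transcribed from the table.
NORMAL_CODES: dict[str, str] = {
    "COIN 1": "5", "COIN 2": "6", "START 1": "1", "START 2": "2",
    "1 RIGHT": "Right arrow", "1 LEFT": "Left arrow",
    "1 UP": "Up arrow", "1 DOWN": "Down arrow",
    "1 SW 1": "Left Ctrl", "1 SW 2": "Left Alt", "1 SW 3": "Space", "1 SW 4": "Left Shift",
    "1 SW 5": "Z", "1 SW 6": "X", "1 SW 7": "C", "1 SW 8": "V",
    "1 A": "P", "1 B": "Enter",
    "2 RIGHT": "G", "2 LEFT": "D", "2 UP": "R", "2 DOWN": "F",
    "2 SW 1": "A", "2 SW 2": "S", "2 SW 3": "Q", "2 SW 4": "W",
    "2 SW 5": "I", "2 SW 6": "K", "2 SW 7": "J", "2 SW 8": "L",
    "2 A": "Tab", "2 B": "Esc",
}

# Alternate codes sent while START 1 (the shift input) is held.
SHIFT_CODES: dict[str, str] = {
    "START 2": "Esc",
    "1 RIGHT": "Tab",
    "1 LEFT": "Enter",
    "1 UP": "`",  # assumption: the key below Esc on a US keyboard layout
    "1 DOWN": "P",
    "1 SW 1": "5",
}


def resolve_key(control: str, shift_held: bool = False) -> str:
    """Return the key code a control sends, given whether START 1 is held."""
    if control not in NORMAL_CODES:
        raise KeyError(f"unknown control: {control}")
    if shift_held and control in SHIFT_CODES:
        return SHIFT_CODES[control]
    # Assumption: controls with no shifted code keep sending their normal code.
    return NORMAL_CODES[control]
```

For example, `resolve_key("1 DOWN")` gives the down arrow, while `resolve_key("1 DOWN", shift_held=True)` gives `P` (pause).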